Is Language a fundamental requirement for AGI?

02 Jan

Looking to 2024’s innovations

The age of language models is here (sorry to state the obvious). 2023 saw the arrival of easy-to-use LLMs and the rise of the pre-existing technologies that enable them, transformers and retrieval in particular, which transformed the AI landscape in a way most of us probably didn't expect.

The sheer acceleration of development and adoption has been nothing short of amazing. Where boardrooms and management teams were once as hesitant and suspicious about AI as they are about blockchain and cryptocurrencies, there is now real interest in adoption and serious discussion of how products like ChatGPT can directly benefit their businesses. Generative AI is approaching the level of hype that "Big Data" enjoyed in years gone by. And coming into 2024, this momentum is spilling over into image and video generation.

OpenAI is obviously a big reason why we are where we are, but what is it about ChatGPT that has actually driven this phenomenal growth? You might say it's the creepy ability to credibly mimic a human response or write whole articles and blog posts (not this one!). Or its ability to write junior-level boilerplate code.

I think it’s these things, and more – but more fundamentally I think it’s something even simpler.

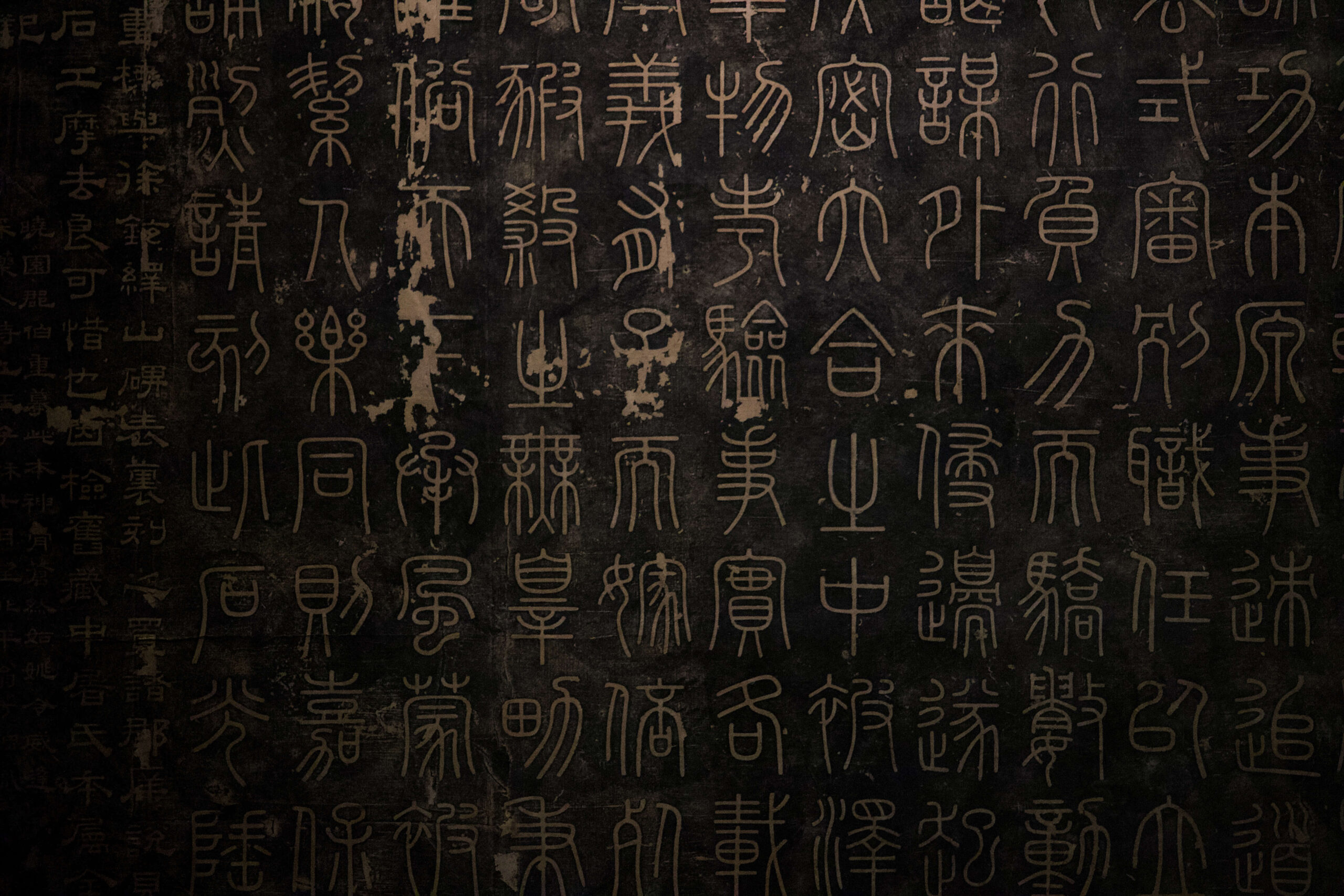

Natural language. Rewind just two years, and whenever you found yourself in a conversation about AI, the picture at the back of your mind was of geeky coders behind screens in dark rooms doing mind-boggling maths in R or Python. The outputs were numbers, vectors, true/false, classifications… and NLP was about processing language.

Now? It's natural language generation.

LLMs deal with humans in our fundamental currency of communication – language. Obvious point, but if a system is talking to you in natural language, instead of 1s and 0s or matrix maths or Python code, it is far more relatable. Remember the computer on the starship Enterprise? Same energy.

I think much of the hype around LLMs is likely to plateau in 2024. There may well be innovation in language construction, or in network reduction and efficiency gains, all of which have far-reaching implications for applications beyond LLMs. But 2023 really was the year of LLMs, whereas 2024 will likely be the year of other types of generative models beyond language.

AGI and the LLM Hype

I'm going to get sniped for using a simplified definition of AGI here, but when I say "AGI" I mean an AI that thinks for itself, has its own wants, and plans and builds internal "models" (how it interprets and interacts with the world) without human intervention. It actually understands its world; it doesn't simply memorise and recall.

I can't believe I have to keep saying this, but LLMs do not understand the world.

I do not equate AI with its ability to mimic a human, and an English-speaking one at that. Not only is that a bit egotistical (who says a human is the epitome?), it also doesn't follow that having the qualities mentioned above requires being human-like or English-speaking.

Have a look at some of the articles in the press about AI taking over the world, and notice how that narrative has sprung almost entirely from ChatGPT.

- The Telegraph – Meet ChatGPT, the scarily intelligent robot who can do your job better than you.

- The Daily Mail – AI bots like ChatGPT could herald ‘an alien invasion’ with the capacity to ‘wipe out humanity’, top AI professor warns.

The debate about the veracity of these claims can be had another day… What is interesting is this: people are only waking up to the potential (note: potential, not threat) of "AI" now that it is relatable. You can converse with it, argue with it, even joke with it. Sure, sometimes it trips up, but so does a 12-year-old.

You are interacting with it in language.

But that very same language is a human construct, not an AI-generated one. OpenAI and others are spending inordinate amounts of cash on optimising models to speak a language that we can all relate to… mostly English for now, but don't be surprised when you see Chinese or Hindi alternatives.

However, the possibility of creating AGI is not a function of how it communicates with humans. That is just the output! The intelligence is internal, created and processed before the output is even generated.

Do you think in a language, or in a construct more abstract than language? You might eventually resort to language in your thinking, for example when you're writing an email, but when you're thinking about a topic you are imagining world models or thinking in abstractions… which have nothing to do with language until they are articulated by a higher brain function.

How can AGI be achieved without being shackled by language?

Language, whilst nice for us humans, is ironically creating an artificial hurdle and a sideshow in our pursuit of AGI. It simply isn't necessary for the core process of AGI. Language generation, however, is necessary for humans to interact with AGI, unless companies like Neuralink can figure out how to tightly integrate a human brain with an artificial system.

There are a few things still fundamentally missing from our knowledge of how to build true AI systems.

The most fundamental, in my opinion, is the ability to create models of the world quickly from few training data points, and then have those models merge into the universal model space. In other words: build small models that integrate together. I wrote a book about this years ago, and it forms the basis of our ideas on multimodal AI at Jiva. Without this fundamental capability, I believe we'll be stuck building ultra-large models that keep growing in size over time. The underlying implication is that we have to move to alternative architectures, away from perceptron techniques, which do not lend themselves to model merging.

Once we have accepted that language isn't a fundamental requirement for figuring out the process of AGI, we can free up bandwidth to actually construct the mechanisms that might enable an artificial system to build world models (abstractions of thought) at its own whim and will. That is when I think we'll reach the age of AI.

Also published on Medium.