21 Feb How Will the EU AI Act Impact You?

EU AI Act

Proposed by the European Commission, and drawing on the definition of AI systems developed by the Organisation for Economic Co-operation and Development (OECD), the EU AI Act is the first legislative proposal of its kind in the world and supports a global consensus around the types of systems that are intended to be regulated as Artificial Intelligence. The EU AI Act is expected to set standards for AI regulation in other jurisdictions, e.g. the UK’s AI regulation, which is currently at consultation stage.

Regulatory Landscape

According to Deloitte, the AI Act will cover not only EU companies but also entities from abroad that provide AI services to EU citizens. Undoubtedly, this increases the prominence of the Act and serves as a reminder for companies across the globe that they must enhance their AI practices and adhere to the EU rules, notably as the Act also brings the prospect of significant fines for non-compliance.

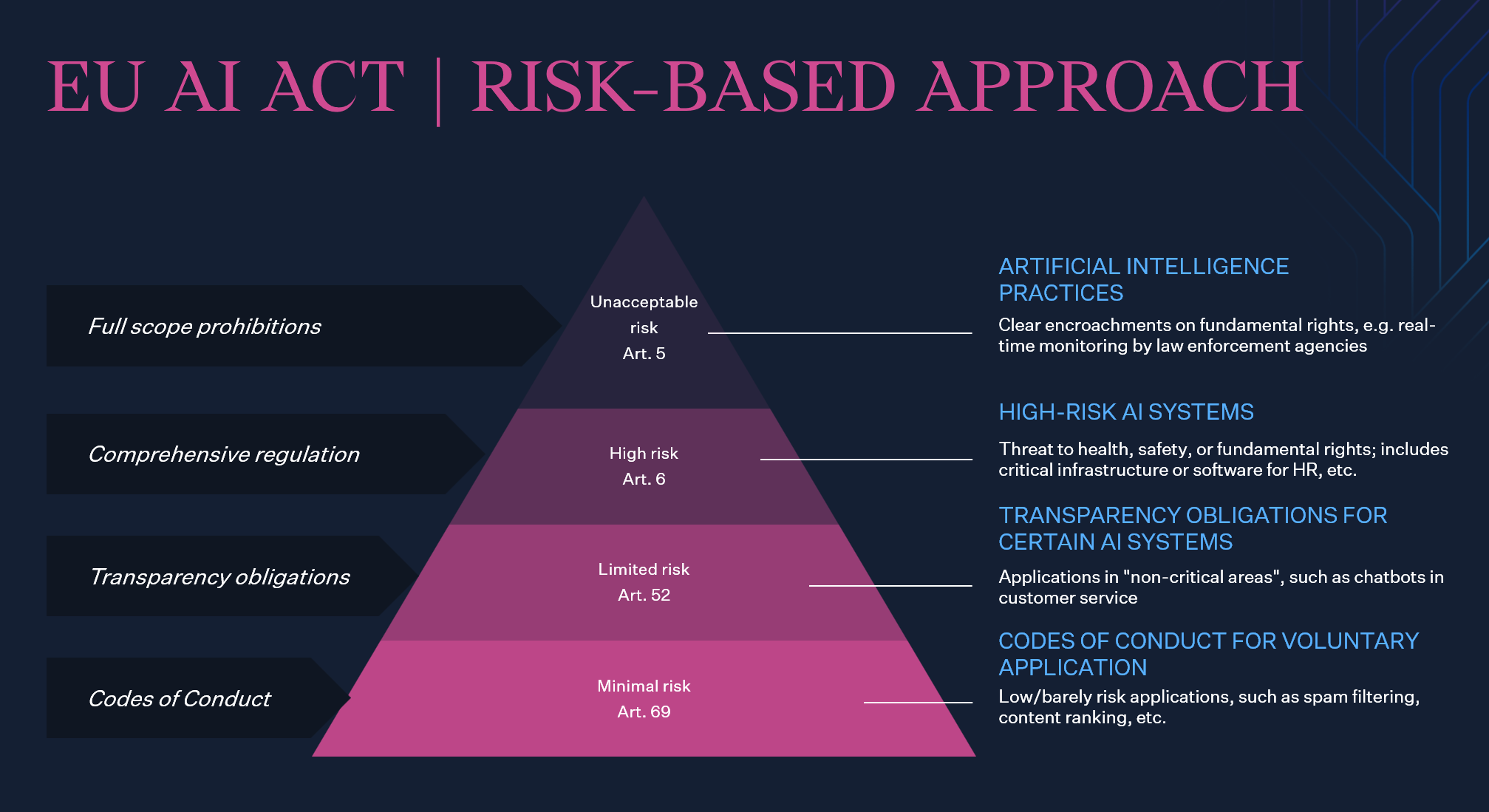

Different Rules for Different Risk Levels

The new rules establish obligations for providers and users depending on the level of risk from AI. While many AI systems pose minimal risk, they need to be assessed. For AI systems classified as high risk (due to their significant potential harm to health, safety, fundamental rights, environment, democracy and the rule of law), strict obligations will apply. This includes rules on mandatory Fundamental Rights Impact Assessments, as well as (among others) Conformity Assessments, data governance requirements, registration in an EU database, risk management and quality management systems, transparency, human oversight, accuracy, robustness and cyber security. Examples of such systems include certain medical devices, recruitment, HR and worker management tools, and critical infrastructure management (e.g. water, gas, electricity etc.).

Unacceptable risk: AI systems considered a threat to people are prohibited, although some exceptions may be allowed for law enforcement purposes. Unacceptable AI systems include:

- Cognitive behavioural manipulation of people or specific vulnerable groups: for example voice-activated toys that encourage dangerous behaviour in children

- Social scoring: classifying people based on behaviour, socio-economic status or personal characteristics

- Biometric identification and categorisation of people

- Real-time and remote biometric identification systems, such as facial recognition

High risk: AI systems that negatively affect safety or fundamental rights will be considered high risk and will be divided into two categories:

1) AI systems that are used in products falling under the EU’s product safety legislation. This includes toys, aviation, cars, medical devices and lifts.

2) AI systems falling into specific areas that will have to be registered in an EU database, e.g.:

- Management and operation of critical infrastructure

- Education and vocational training

- Employment, worker management and access to self-employment

- Access to and enjoyment of essential private services and public services and benefits

- Law enforcement

- Migration, asylum and border control management

- Assistance in legal interpretation and application of the law.

All high-risk AI systems will require extensive governance activities, including conformity assessments, to ensure compliance before being put on the market and also throughout their lifecycle.

General purpose and generative AI: Generative AI, like ChatGPT, would have to comply with transparency requirements:

- Disclosing that the content was generated by AI

- Designing the model to prevent it from generating illegal content

- Publishing summaries of copyrighted data used for training

High-impact general-purpose AI models that might pose systemic risk, such as the more advanced AI model GPT-4, would have to undergo thorough evaluations and any serious incidents would have to be reported to the European Commission.

Limited risk AI systems should comply with minimal transparency requirements that would allow users to make informed decisions, e.g. users should be aware they’re interacting with an AI system. After interacting with an application, the user can then decide whether they want to continue using it. This includes AI systems that generate or manipulate image, audio or video content, for example deepfakes.

Minimal risk or no risk AI systems may be deployed without additional restrictions. Providers of such systems may choose to apply the requirements for trustworthy AI and adhere to voluntary codes of conduct.
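
For teams starting an internal inventory, the four tiers above could be sketched as a simple first-pass lookup. This is an illustration only, not a legal classification: `RiskTier`, `triage` and the use-case labels are hypothetical names, the high-risk and prohibited categories are paraphrased from the lists in this article, and `spam_filter` is an invented example of a system that matches none of them.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical labels, paraphrased from the examples listed in this article.
PROHIBITED_USES = {"social_scoring", "cognitive_behavioural_manipulation"}
HIGH_RISK_AREAS = {
    "critical_infrastructure",
    "education",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_border_control",
    "legal_interpretation",
}
TRANSPARENCY_USES = {"chatbot", "deepfake_generation"}


def triage(use_case: str) -> RiskTier:
    """Return a first-pass tier for an inventory entry (not a legal determination)."""
    if use_case in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if use_case in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if use_case in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    # Simplification: anything unrecognised falls through to minimal risk.
    return RiskTier.MINIMAL


if __name__ == "__main__":
    for name in ["employment", "chatbot", "spam_filter"]:
        print(name, "->", triage(name).value)
```

Defaulting unrecognised systems to minimal risk keeps the sketch short; a real inventory would more likely flag them for manual review, since the Act expects even low-risk systems to be assessed.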

A New Regulator

The EU institutions agreed on establishing new administrative infrastructures including:

- An AI Office, which will sit within the Commission and will be tasked with overseeing the most advanced AI models, contributing to fostering new standards and testing practices, and enforcing the common rules in all EU member states. It seems likely this will become equivalent to the AI Safety Institutes recently announced in the UK and the US;

- A scientific panel of independent experts, which will advise the AI Office about GPAI models and on the emergence of high-impact GPAI models, contribute to the development of methodologies for evaluating the capabilities of foundation models and monitor possible material safety risks related to foundation models;

- An AI Board, comprising EU member states’ representatives, which will remain a coordination platform and an advisory body to the Commission while contributing to the implementation of the EU AI Act (e.g. designing codes of practice); and

- An advisory forum for stakeholders, which will be set up to provide technical expertise to the AI Board.

Non-compliance with the rules will lead to fines ranging from €7.5 million or 1.5% of global turnover to €35 million or 7% of global turnover, depending on the infringement and the size of the company.
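
To get a feel for the exposure, the arithmetic can be sketched as below. The sketch assumes the cap is the higher of the fixed amount and the turnover-based amount; this article does not state which of the two applies, so treat that rule as an assumption to confirm against the final text. The function name `fine_cap_eur` and the use of basis points (1% = 100 bps) are illustrative choices.

```python
def fine_cap_eur(global_turnover_eur: int, fixed_eur: int, rate_bps: int) -> int:
    """Upper bound of a fine, ASSUMING the higher of the two figures applies.

    rate_bps is the turnover percentage in basis points (700 = 7%),
    which keeps the calculation in exact integer arithmetic.
    """
    turnover_based = global_turnover_eur * rate_bps // 10_000
    return max(fixed_eur, turnover_based)


# The two ends of the range quoted above: (fixed amount in EUR, rate in bps).
TOP_BAND = (35_000_000, 700)    # EUR 35 million or 7%
BOTTOM_BAND = (7_500_000, 150)  # EUR 7.5 million or 1.5%

if __name__ == "__main__":
    turnover = 1_000_000_000  # hypothetical company with EUR 1 billion turnover
    print("top band cap:", fine_cap_eur(turnover, *TOP_BAND))
    print("bottom band cap:", fine_cap_eur(turnover, *BOTTOM_BAND))
```

Under that assumption, a company with €1 billion in global turnover would face a top-band cap of €70 million, because 7% of turnover exceeds the €35 million fixed figure, while a company with €100 million in turnover would remain at €35 million.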

Preparing for the EU AI Act

While waiting for the EU AI Act to be formally adopted (expected Spring 2024) and to become fully applicable (18-24 month implementation period), organisations using or planning to use AI systems should start addressing impacts by mapping their processes and assessing the level of compliance of their AI systems with the new rules. The EU AI Act is the first formal legislation to begin to fill in the gaps of ethical and regulatory principles to which organisations must adhere when deploying AI.

Implementing an AI governance strategy should be the starting point. A robust strategy must be aligned with business objectives and identify areas within the business where AI will most benefit the organisation’s strategic goals. It will also require full alignment with the initiatives aimed at managing personal and non-personal data assets, in compliance with existing legislation.

Beyond that, organisations should consider implementing a framework of policies and processes to ensure that only compliant developers are onboarded and only compliant models are developed or deployed. Risks should be properly identified and mitigated, with adequate monitoring and supervision throughout the AI system lifecycle. Measures ranging from internal training to market surveillance will be key. These can likely be developed from existing risk management processes, in particular data protection risk assessments, supplier due diligence and audits.

Finally, consider the approach globally. The EU AI Act is a leader but it will not be the only global law developed to address AI risks and promote trust. Any truly global strategy will need to accommodate the key requirements and principles of the EU AI Act but also be forward-looking in how other regulatory requirements may develop.