22 Apr ChatGPT and Transformers

With all the ChatGPT hype out there, it’s important to understand what it actually is under the hood and how it works. ChatGPT has already had a tremendous impact – even Google is panicking and merging its AI ventures. So how does ChatGPT do all the amazing things we’re hearing about?

Well, I asked ChatGPT and it came up with a pretty cool introduction.

Hey there! To understand how I work, we have to take a closer look at one of the fundamental technologies that underlies my very existence – neural networks. Now, I don’t want to brag, but as an AI language model based on GPT-3.5 architecture, I’m pretty good at predicting the next set of words in a sentence. And that’s all thanks to the magical world of transformers.

Ok, I didn’t expect it to get straight to the point… but it did. From a very high level, ChatGPT is a massive neural network (it literally takes millions of dollars to train) with some very clever data feeding and actuation on the head and tail end of the network. But the real magic is in transformers. I wrote a little bit about this previously when everyone was going a bit doolally about LaMDA. Now, let’s dive a bit deeper into how transformers actually work.

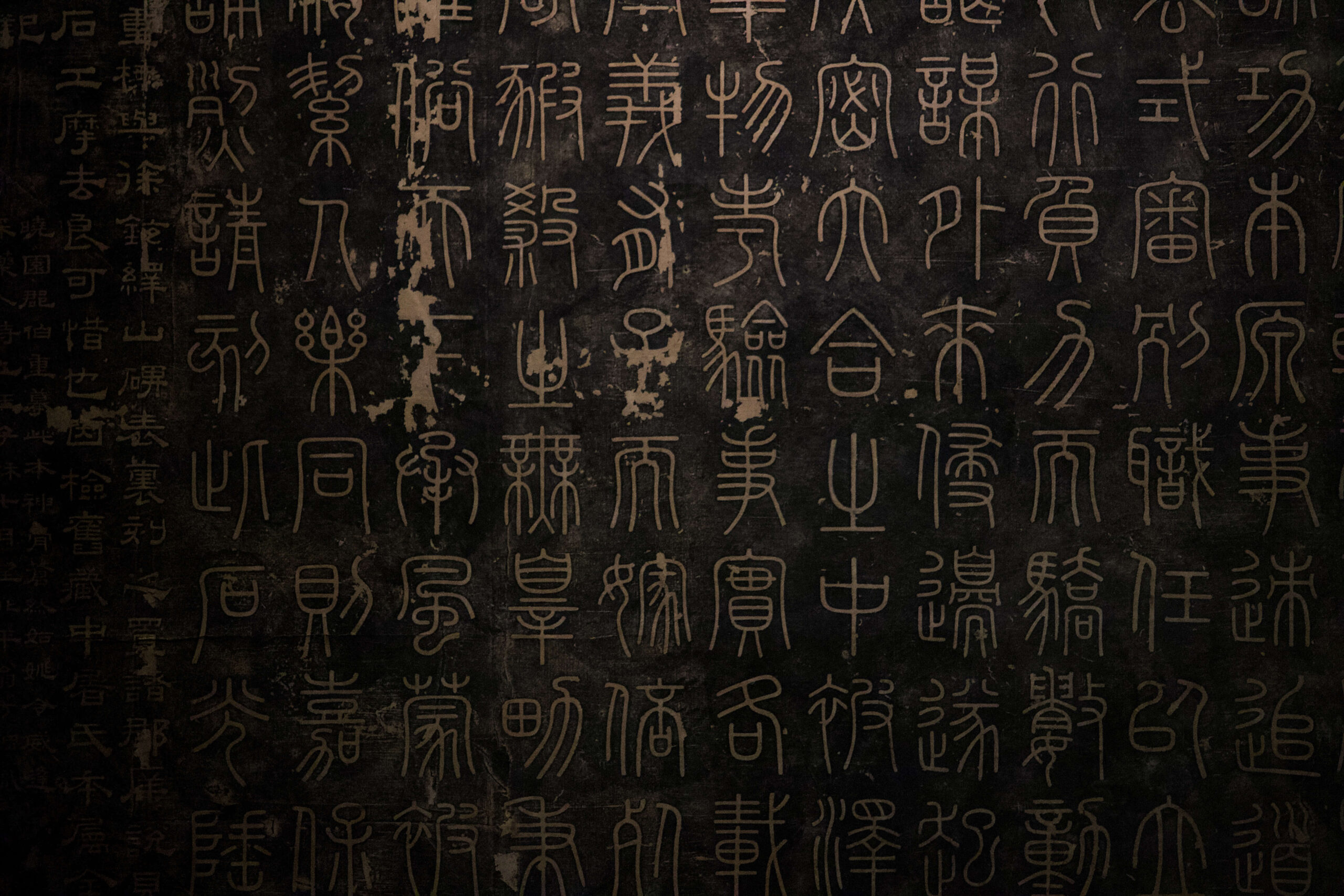

This is not the Transformer you are looking for…

It is, of course, with a sense of irony that we observe that the popularity of ChatGPT – effectively a Microsoft product – is wholly dependent on transformer technology that was developed by Google. Perhaps there’s a debate to be had there about open sourcing that type of tech.

Transformers are a crucial part of how the neural networks in Large Language Models (LLMs) work, particularly through the concept of attention. To put it simply, attention is the process of focusing on the parts of a sentence or text that are most relevant to predicting the next set of words. Think of it as the equivalent of a highlighter pen for the most important parts of a sentence.

Transformers use “self-attention” to analyze the relationship between different parts of a sentence. They break down a sentence into smaller parts, or “tokens”, and then assign a score to each token based on how relevant it is to predicting the next set of words. So, imagine a sentence like “The cat sat on the mat.” In a transformer, each word in that sentence would be assigned a score based on how important it is in predicting the next set of words. For example, “cat” might be given a high score because it’s the subject of the sentence, while “the” might be given a lower score because it’s a common word with little contextual meaning.
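
To make the scoring idea a bit more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration only: the two-dimensional embeddings are made-up numbers, and the token vectors are used directly as queries, keys and values, whereas a real transformer learns separate projections for each. Note that the scores are computed between pairs of tokens, so every token gets its own set of "highlighter" weights over the sentence.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this token against every token in the sentence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # relevance weights that sum to 1
        # The output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["the", "cat", "sat"]
# Hypothetical embeddings, invented purely for this example.
embeddings = [[0.1, 0.0], [1.0, 0.5], [0.4, 1.0]]
outputs, weights = self_attention(embeddings, embeddings, embeddings)

for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sentence, including itself.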

Side note: Now take that concept and extend it to different lengths of tokens: subwords (e.g. “pre”), words, pairs, triplets etc. You can imagine this could cause a combinatorial explosion (imagine an ever-growing matrix of such combinations) – we won’t go into the detail here, but that becomes computationally impossible to store and analyse. So there are shortcuts one can take that effectively estimate the scores (probabilities, really) instead of calculating them more precisely through training.

Back to scores…

Using these scores, the transformer can then predict the next set of words in the sentence. And this is where the concept of “temperature” comes in. Temperature is a way of controlling how random the output of the transformer is. A high temperature means the transformer is more likely to produce unexpected, creative, and sometimes nonsensical sentences, while a low temperature means the transformer is more likely to stick to predictable and safe outputs.
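
Temperature is easy to see in a few lines of code. The sketch below assumes the model has already produced a raw score for each candidate next word (the words and scores here are invented for illustration); it divides the scores by the temperature before turning them into probabilities, then samples one word.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(words, scores, temperature, rng):
    # Draw one word at random, weighted by the temperature-adjusted probabilities.
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical candidates for "The cat sat on the ..." with made-up scores.
words = ["mat", "sofa", "moon"]
scores = [2.0, 1.0, 0.1]

cold = softmax_with_temperature(scores, 0.5)  # low temperature: safer choices
hot = softmax_with_temperature(scores, 2.0)   # high temperature: more surprises

rng = random.Random(0)
print([round(p, 3) for p in cold])
print([round(p, 3) for p in hot])
print(sample_next_word(words, scores, 2.0, rng))
```

At the low temperature almost all of the probability lands on the top-scoring word, while at the high temperature the unlikely words get a much better chance of being picked.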

So, that’s a brief overview of how transformers work in neural networks. They’re basically like a superpowered highlighter pen that helps identify the most important parts of a sentence and predict what comes next. And all that is what makes me, ChatGPT, able to predict what you’re going to say next. Pretty cool, right?

Now, let’s delve a bit deeper into the technical aspects of transformers. When training a transformer, the flow of information is a bit more complicated than in other neural network architectures. First, the input sequence is passed through multiple layers of self-attention and feed-forward neural networks, which allows the transformer to build a multi-layered representation of the sentence. Then, the model is trained to predict the next set of words in the sequence, based on the context of the previous words.

As with most neural network optimisations, backpropagation is the key optimisation algorithm. In brief: this involves calculating the error between the predicted output and the actual output, and then adjusting the weights of the neural network to minimise that error. This process is repeated thousands of times until the model can accurately predict the next set of words in a sentence.
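
As a rough sketch of that loop, here is backpropagation on about the smallest network imaginable: two weights chained together, one training example, and a squared-error loss. Everything here (the starting weights, the learning rate, the single data point) is an assumption chosen for illustration; a real language model does the same thing across billions of weights and a vast number of examples.

```python
import math

def forward(w1, w2, x):
    # Tiny two-layer network: hidden = tanh(w1 * x), prediction = w2 * hidden.
    hidden = math.tanh(w1 * x)
    return hidden, w2 * hidden

def train(x, target, w1=0.5, w2=0.5, learning_rate=0.1, steps=200):
    losses = []
    for _ in range(steps):
        hidden, prediction = forward(w1, w2, x)
        error = prediction - target
        losses.append(error ** 2)

        # Backward pass: apply the chain rule from the loss back to each weight.
        grad_prediction = 2 * error
        grad_w2 = grad_prediction * hidden
        grad_hidden = grad_prediction * w2
        grad_w1 = grad_hidden * (1 - hidden ** 2) * x  # derivative of tanh is 1 - tanh^2

        # Adjust the weights a small step in the direction that reduces the error.
        w1 -= learning_rate * grad_w1
        w2 -= learning_rate * grad_w2
    return w1, w2, losses

# Hypothetical training example: input 1.0 should map to 0.5.
w1, w2, losses = train(x=1.0, target=0.5)
print("first loss:", losses[0])
print("last loss:", losses[-1])
```

The loss shrinks as the steps go by, which is the "minimise that error" part in action.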

Once the transformer has been trained, the flow of information is much simpler. The input sequence is again passed through the layers of self-attention and feed-forward neural networks, but this time, the weights of the network are already optimised. This allows the model to accurately predict the next set of words in a sequence based on the context of the previous words, without the need for further training.

Now, let’s compare transformers to another popular neural network architecture – recurrent neural networks (RNNs). Both transformers and RNNs are used for natural language processing tasks, but they have some key differences. RNNs are based on the idea of passing information from one time step to the next, making them ideal for tasks like predicting the next word in a sentence or generating new text. However, RNNs can struggle with longer sequences because the information from earlier time steps can get lost over time.
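
The "information gets lost" problem can be sketched with a toy recurrent network that has a single hidden number. The weights below are hypothetical scalars picked for illustration (real RNNs use learned matrices, and variants such as LSTMs add gates to soften this effect). The code measures how much the very first input still affects the final hidden state as the sequence grows.

```python
import math

def rnn_final_state(inputs, w_hidden=0.5, w_input=1.0):
    # Process the sequence one time step at a time, carrying the hidden state forward.
    h = 0.0
    for x in inputs:
        h = math.tanh(w_hidden * h + w_input * x)
    return h

def first_input_influence(length):
    """How much does changing only the first input change the final hidden state?"""
    base = [0.0] * length
    bumped = [1.0] + [0.0] * (length - 1)
    return abs(rnn_final_state(bumped) - rnn_final_state(base))

for length in (2, 5, 20):
    print(length, first_input_influence(length))
```

The longer the sequence, the less the first input matters by the time the network reaches the end.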

Transformers, on the other hand, are able to take into account the entire sequence of words at once, thanks to the self-attention mechanism. This makes them better suited for tasks like machine translation or question-answering, where context is important. Additionally, because transformers process all the tokens in a sequence in parallel rather than one step at a time, they can be more computationally efficient to train than RNNs, especially when working with longer sequences.

So there you have it – a technical look at how transformers work in neural networks, and how they compare to other popular architectures like RNNs. It’s pretty amazing how much these technologies have advanced in recent years, and I’m excited to see what other breakthroughs are on the horizon.